So why build your own CMS? Well, I had two basic needs:

- Static HTML pages

- Generated menus

If I don't want a server-side CMS, why not write all pages with an HTML editor like the one in Seamonkey? I used to do that for my old homepage, and updating the menu was torture. The obvious solution is to let software take care of the menus.

When talking about software, the next question has to be whether existing software already solves the problem. I found out that there is even a whole class of software that addresses it, called Desktop CMS. Unfortunately, the software I found was only for Windows XP, which is not quite a solution for a Linux user like me. Poor Man's CMS was the only exception I found before starting the project. After I had written the basic core, I discovered that running apt-cache search CMS or apt-cache search blog may reveal some alternatives, but I haven't checked whether they are configurable enough to adjust the output to my needs.

The configuration of existing software was another reason for writing my own. I thought that it would be much easier to write software tailored to the needs of my homepage than to configure a general-purpose package for them. For the first version I can confirm that writing the software was the easiest part. Designing the layout was much harder.

Design

The visual layout

The first step in designing a custom CMS is to design the homepage itself. This means deciding what kind of content will be shown, how it will be organized, and what the page will look like. I decided that I would like to have an articles section and a blog section. The main menu should be at the top, imitating tabs, which have become quite popular since the last update of my old homepage.

In the blog section there should be an overview of all blog entries that shows the title, the day it was written, and a preview for each entry. The full content is accessible via a link at the end of the preview. The previews are ordered from the newest to the oldest, and only 10 previews are shown per page. To navigate between the different preview pages there are links at the bottom of the page.
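The ordering and paging described above can be sketched in a few lines of Python. This is only an illustration: the shape of an entry (a date plus a title) and the function name overview_pages are assumptions made for the example, and only the page size of 10 comes from the text.

```python
from datetime import date
from typing import List, Sequence, Tuple

# Hypothetical entry shape for this sketch: (day written, title).
Entry = Tuple[date, str]

PREVIEWS_PER_PAGE = 10  # page size named in the text


def overview_pages(entries: Sequence[Entry],
                   per_page: int = PREVIEWS_PER_PAGE) -> List[List[Entry]]:
    """Sort entries newest first and split them into overview pages."""
    newest_first = sorted(entries, key=lambda entry: entry[0], reverse=True)
    return [newest_first[i:i + per_page]
            for i in range(0, len(newest_first), per_page)]
```

The number of pages returned also tells you how many navigation links are needed at the bottom of each overview page.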

In the article section there is only a list showing, for each article, the title, the date when it was created or last updated, a description, and a link to the article itself. The order in which the articles appear should be freely chosen by me.

The input

So far a lot of work has been done, but none of it is related to writing a CMS. The same work would have been necessary with existing software. But how will the output be generated, and how will the content get into the CMS? Well, writing an editor is not an option. Using wiki syntax, a self-made syntax, or even XML isn't an option either. The easiest thing is to use an existing HTML editor and adjust the raw content to fit into the defined layout.

The basic idea is to use a template file that contains all the static layout elements that should be fixed for every page, and to insert the content from the other HTML files. Grails uses a similar approach with SiteMesh. So template.html contains a placeholder, I've chosen ${content}, that receives the content between the body tags of the HTML files. Finding the body tag correctly in the wild is difficult, as you need to consider comments and attributes. Writing software for yourself makes it easy, because I have no need for body tags inside comments or with attributes. Then the tags are very easy to find and you just take the string between them.
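A minimal Python sketch of this idea, under the same simplification: the body tags are assumed to be written exactly as <body> and </body>, in lowercase and without attributes. The function names are made up for the example. Python's string.Template happens to understand the ${content} placeholder syntax directly.

```python
from string import Template


def extract_body(html: str) -> str:
    """Return the text between a plain <body> and </body> pair.

    Assumes lowercase tags without attributes and no body tags hidden
    in comments; str.index raises ValueError if a tag is missing.
    """
    start = html.index("<body>") + len("<body>")
    end = html.index("</body>", start)
    return html[start:end].strip()


def render(template_text: str, page_html: str) -> str:
    """Fill the ${content} placeholder of the template with the page body."""
    # safe_substitute leaves any other $... sequences in the template alone.
    return Template(template_text).safe_substitute(content=extract_body(page_html))
```

With a template such as `<html><body><nav>menu</nav>${content}</body></html>`, render() returns the template with the page's body content in place of the placeholder.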

Static content such as blog entries, articles, and the about-me page is handled with this simple approach. The next question is how to generate the blog overview. Here a title, a post date, and a preview are needed. The title can be taken from the title tag in the HTML files. For the preview I decided to use the first paragraph of the entry. Other systems let you write an explicit description or take a fixed number of words or characters, or something else. But I think I will be able to write a descriptive first paragraph that gives readers enough information to make them want to click the Read more button. Now only the date is missing. Taking it from the file's timestamp is unreliable, because that date may change when I have to move the homepage data to a new hard drive. Putting it in the HTML file is also risky, as an editor may decide to delete any comments or meta data. The file name, in contrast, doesn't face these problems. So all blog entries now start with YYYYMMDD. The drawback is that I can only release one blog post per day. Looking back on how much I've previously posted on the web, I'm pretty sure that I will not have to post two articles on the same day. In the rare case that I write two articles on one day, I can simply wait until the next day to post the second one.
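The three pieces of information for the overview could be collected roughly like this. It is a sketch under assumptions: the regular expressions only cope with simple, well-formed pages like those an HTML editor produces, and the names BlogEntry, parse_post_date, and read_entry are invented for the example.

```python
import re
from datetime import date, datetime
from pathlib import Path
from typing import NamedTuple


class BlogEntry(NamedTuple):
    posted: date
    title: str
    preview: str


# Simple patterns; they assume one <title> and well-formed <p>...</p> pairs.
TITLE_RE = re.compile(r"<title>(.*?)</title>", re.DOTALL | re.IGNORECASE)
FIRST_P_RE = re.compile(r"<p\b[^>]*>(.*?)</p>", re.DOTALL | re.IGNORECASE)


def parse_post_date(file_name: str) -> date:
    """Read the post date from the YYYYMMDD prefix of the file name."""
    return datetime.strptime(Path(file_name).name[:8], "%Y%m%d").date()


def read_entry(file_name: str, html: str) -> BlogEntry:
    """Collect date (file name), title (title tag), and preview (first paragraph)."""
    title = TITLE_RE.search(html)
    first_paragraph = FIRST_P_RE.search(html)
    if title is None or first_paragraph is None:
        raise ValueError(f"{file_name}: missing title or first paragraph")
    return BlogEntry(parse_post_date(file_name),
                     title.group(1).strip(),
                     first_paragraph.group(1).strip())
```

A file name without a valid date prefix makes parse_post_date raise a ValueError, which is a cheap way to notice a misnamed blog entry during generation.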

The scheme that worked for the blog overview couldn't be used for articles, as they need an explicit order and a description that can't be taken from the first paragraph. The description is easy, as Seamonkey offers to put a description of the page into the meta tags of the HTML files. You just have to extract it. But unlike blog entries, articles get updated, and there is still the issue of the order. The simplest solution that crossed my mind was to create a text file in which I write down a marker, the file name, the date, and whether the page was created or updated on that date. Each entry gets its own line. The order in the text file is the order in the overview.
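Here is one way such an index file and the meta description could be read in Python. The exact file format is not spelled out above, so the line layout used here (marker, file name, ISO date, and the word created or updated, separated by whitespace) is purely an assumption for illustration, as are all the names.

```python
from datetime import date
from html.parser import HTMLParser
from typing import List, NamedTuple, Optional


class ArticleRef(NamedTuple):
    file_name: str
    day: date
    status: str  # "created" or "updated"


def parse_index(text: str, marker: str = "article") -> List[ArticleRef]:
    """Parse lines like 'article my-cms.html 2009-05-14 created' (assumed format).

    Lines that do not start with the marker are skipped; file order is kept.
    """
    refs = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 4 or parts[0] != marker:
            continue
        _, file_name, day, status = parts
        refs.append(ArticleRef(file_name, date.fromisoformat(day), status))
    return refs


class _DescriptionParser(HTMLParser):
    """Remembers the content of <meta name="description" ...>."""

    def __init__(self) -> None:
        super().__init__()
        self.description: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content")


def extract_description(html: str) -> Optional[str]:
    parser = _DescriptionParser()
    parser.feed(html)
    return parser.description
```

Because parse_index returns the entries in file order, rearranging the overview only requires moving lines in the text file.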

The creation process

The creation process is pretty simple: load the template, load the HTML file, extract the required information from the HTML file, replace the variables in the template with the extracted information, and voilà, there's the generated page. But what to do with it?

Putting it directly online

isn't an option as the program may fail in the generation process.

Obviously it needs to be saved to the local hard-drive. But before

deciding where exactly lets move on and ask what's next? Delete

everything online and upload all newly generated content? Or generating

only the content that needs to be newly uploaded? For this solution you

need to know what is online during generation. Doing this fast and

correct isn't easy. So generating the while page is the way to go. But

upload the whole site event though only some pages have changed? That's

a wast of traffic. So after the generation of a page it must be

accessible for the next generation of it. My solution is that there is

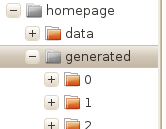

a generated directory and

the version of the homepage is the name of the sub-folder within this

homepage.

Putting it directly online

isn't an option as the program may fail in the generation process.

Obviously it needs to be saved to the local hard-drive. But before

deciding where exactly lets move on and ask what's next? Delete

everything online and upload all newly generated content? Or generating

only the content that needs to be newly uploaded? For this solution you

need to know what is online during generation. Doing this fast and

correct isn't easy. So generating the while page is the way to go. But

upload the whole site event though only some pages have changed? That's

a wast of traffic. So after the generation of a page it must be

accessible for the next generation of it. My solution is that there is

a generated directory and

the version of the homepage is the name of the sub-folder within this

homepage. Having the current and new version of the homepage available it is pretty easy to determine the files that haven't changed. Then you delete all the other files on the web-space and download the rest of the new page. This is ok as long as all newly generated versions are uploaded or nobody defaces the website. How to secure against this without wasting traffic? Check the file names and there sizes? These information need little traffic and it is pretty unlikely that there were no changes in number of files, their sizes, and their name, between the last and the last but one version of the homepage. The some is true for defacement's.
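The comparison of two generated versions can be sketched as follows. This is an illustration under assumptions, not the actual implementation: the function names are invented, both versions are assumed to sit in local sub-folders of the generated directory, and the code only plans the upload without talking to a server. The size manifest is the kind of cheap name-and-size summary that could be compared against a listing of the web space.

```python
import filecmp
from pathlib import Path
from typing import Dict, Set, Tuple


def relative_files(root: Path) -> Set[str]:
    """All files below root, as paths relative to root."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def plan_upload(old_root: Path, new_root: Path) -> Tuple[Set[str], Set[str]]:
    """Return (files to upload, files to delete online).

    Unchanged files are those present in both versions with identical bytes.
    Everything else from the old version is deleted, and everything else
    from the new version is uploaded.
    """
    old_files = relative_files(old_root)
    new_files = relative_files(new_root)
    unchanged = {name for name in old_files & new_files
                 if filecmp.cmp(old_root / name, new_root / name, shallow=False)}
    return new_files - unchanged, old_files - unchanged


def size_manifest(root: Path) -> Dict[str, int]:
    """Map each relative file name to its size in bytes."""
    return {name: (root / name).stat().st_size for name in relative_files(root)}
```

If the manifest of the last uploaded version does not match the names and sizes found online, that is a hint that a version was skipped or the site was altered, and a full upload is the safe choice.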